Product Pricing Scraper

System Requirements Specification (SRS)

Project Overview

A backend service that scrapes product information from e-commerce sites (Flipkart, Swiggy) based on product URLs and location data (pincode). The system checks whether the displayed price complies with Minimum Advertised Price (MAP) policies and returns structured data for monitoring.

Inputs

- Product URL: Valid URL from supported platforms (Flipkart, Swiggy)

- Pincode: Location identifier for region-specific pricing

- MAP (Optional): Minimum Advertised Price threshold for compliance checking
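
A minimal validation sketch for these inputs follows. It assumes a hypothetical `ScrapeRequest` shape, since the field names are not defined in this spec. The sketch detects the platform from the URL hostname. It accepts only six-digit Indian PIN codes that do not start with 0, and it treats MAP as an optional positive number.

```typescript
// Hypothetical request shape; field names are assumptions, not from the spec.
type Platform = "flipkart" | "swiggy";

interface ScrapeRequest {
  productUrl: string;
  pincode: string;
  map?: number;
}

interface ValidatedRequest extends ScrapeRequest {
  platform: Platform;
}

// Indian PIN codes are six digits and never start with 0.
const PINCODE_RE = /^[1-9][0-9]{5}$/;

// Map a URL's hostname to a supported platform, or null if unsupported.
function detectPlatform(rawUrl: string): Platform | null {
  let host: string;
  try {
    host = new URL(rawUrl).hostname.toLowerCase();
  } catch {
    return null; // not a parseable absolute URL
  }
  // Exact domain or a subdomain of it; rejects look-alikes such as notflipkart.com
  const matches = (domain: string) => host === domain || host.endsWith("." + domain);
  if (matches("flipkart.com")) return "flipkart";
  if (matches("swiggy.com")) return "swiggy";
  return null;
}

// Throws on invalid input; returns the request annotated with its platform.
function validateRequest(req: ScrapeRequest): ValidatedRequest {
  const platform = detectPlatform(req.productUrl);
  if (platform === null) throw new Error(`Unsupported or invalid URL: ${req.productUrl}`);
  if (!PINCODE_RE.test(req.pincode)) throw new Error(`Invalid pincode: ${req.pincode}`);
  if (req.map !== undefined && !(Number.isFinite(req.map) && req.map > 0)) {
    throw new Error(`MAP must be a positive number, got ${req.map}`);
  }
  return { ...req, platform };
}
```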

Outputs

{
  "product_name": "Product XYZ",
  "image_url": "https://example.com/image.jpg",
  "current_price": 123.45,
  "map_price": 120.00,
  "status": "GREEN",
  "city": "Meerut",
  "scrape_time_ms": 312
}

status is "GREEN" when current_price ≥ map_price and "RED" when current_price < map_price.
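
The status rule can be sketched as shown below. Prices are compared in integer paise so that floating-point artifacts do not affect the result. The spec does not say what `status` should be when no MAP is supplied. This sketch assumes `null` for both `map_price` and `status` in that case. `buildResponse` and its parameters are hypothetical.

```typescript
type MapStatus = "GREEN" | "RED";

// Output shape from the spec; the null cases are an assumption (MAP omitted).
interface ScrapeResponse {
  product_name: string;
  image_url: string;
  current_price: number;
  map_price: number | null;
  status: MapStatus | null;
  city: string;
  scrape_time_ms: number;
}

// Compare in integer paise so values like 119.99999999999999 count as 120.00.
function toPaise(rupees: number): number {
  return Math.round(rupees * 100);
}

// GREEN if price >= MAP, RED if price < MAP.
function mapStatus(currentPrice: number, mapPrice: number): MapStatus {
  return toPaise(currentPrice) >= toPaise(mapPrice) ? "GREEN" : "RED";
}

function buildResponse(
  scraped: { name: string; imageUrl: string; price: number; city: string },
  mapPrice: number | undefined,
  startedAtMs: number,
  nowMs: number = Date.now(),
): ScrapeResponse {
  return {
    product_name: scraped.name,
    image_url: scraped.imageUrl,
    current_price: scraped.price,
    map_price: mapPrice ?? null,
    status: mapPrice === undefined ? null : mapStatus(scraped.price, mapPrice),
    city: scraped.city,
    scrape_time_ms: nowMs - startedAtMs,
  };
}
```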

Key Constraints & Requirements

- Response Time: Must return data in ≤ 5 seconds

- Fault Tolerance: Must handle website structure changes and temporary failures

- Politeness: Respect robots.txt and implement rate limiting

- Location Handling: Must handle location-specific popups and price variations

- Scalability: Should scale to handle multiple concurrent requests
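
One way to enforce the 5-second response budget is to race the scrape against a timer. The sketch below uses a hypothetical `withDeadline` helper for this. `Promise.race` only stops waiting for the scrape. It does not cancel the underlying work, so the caller still has to close the page or browser context itself.

```typescript
// Budget taken from the "≤ 5 seconds" response-time constraint.
const RESPONSE_BUDGET_MS = 5_000;

class DeadlineExceededError extends Error {
  constructor(ms: number) {
    super(`Scrape exceeded ${ms} ms budget`);
    this.name = "DeadlineExceededError";
  }
}

// Resolve with the task's result, or reject once `ms` elapses.
// The timer is always cleared so it cannot keep the process alive.
async function withDeadline<T>(task: Promise<T>, ms: number = RESPONSE_BUDGET_MS): Promise<T> {
  let timer: ReturnType<typeof setTimeout> | undefined;
  const timeout = new Promise<never>((_, reject) => {
    timer = setTimeout(() => reject(new DeadlineExceededError(ms)), ms);
  });
  try {
    return await Promise.race([task, timeout]);
  } finally {
    clearTimeout(timer);
  }
}
```

A caller would typically wrap the whole per-request scrape in `withDeadline(...)` and close the page in a `finally` block. On timeout it would return an error response.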

System Architecture

High-Level Design (V1)

Component Details

1. API Gateway

- Technology: FastAPI (Python) or Express.js (TypeScript)

- Responsibility: Request validation, rate limiting, response formatting

2. Web Scraper Service

- Technology: Playwright (Python/TypeScript) or Puppeteer (TypeScript)

- Responsibility: Website detection, scraping orchestration, price analysis

3. Browser Automation

- Technology: Playwright/Puppeteer with advanced stealth configuration

- Responsibility: Handle location popups, navigate websites, extract data

- Browser Mode: headful during development and testing, headless in production deployment
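
The split between the Web Scraper Service and the per-site browser logic could be expressed as a small registry. `SiteScraper` and `ScraperRegistry` are illustrative names, not part of an existing codebase. Each site module would wrap its own Playwright or API calls behind `scrape`.

```typescript
// Hypothetical contract between the Web Scraper Service and site modules.
interface ScrapedProduct {
  name: string;
  imageUrl: string;
  price: number;
}

interface SiteScraper {
  handles(hostname: string): boolean; // website detection
  scrape(url: string, pincode: string): Promise<ScrapedProduct>;
}

class ScraperRegistry {
  private readonly scrapers: SiteScraper[] = [];

  register(scraper: SiteScraper): this {
    this.scrapers.push(scraper);
    return this;
  }

  // Pick the first registered module that claims the URL's hostname.
  resolve(url: string): SiteScraper {
    const host = new URL(url).hostname.toLowerCase();
    const scraper = this.scrapers.find((s) => s.handles(host));
    if (!scraper) throw new Error(`No scraper registered for ${host}`);
    return scraper;
  }

  // Orchestration entry point used by the API layer.
  scrape(url: string, pincode: string): Promise<ScrapedProduct> {
    return this.resolve(url).scrape(url, pincode);
  }
}
```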

Detailed Data Flow

Optimizations for Response Time (≤ 5s)

1. Parallel Processing with Rate Limiting

- Process requests in parallel while staying within per-IP and per-domain rate limits

- Balance concurrent requests to avoid detection

- Use domain-specific request patterns that mimic natural user behavior
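
Capping parallelism per domain can be done with a counting semaphore, as sketched below. The default limit of 2 is a placeholder, not a measured safe value. When a task finishes, it hands its slot straight to the next waiter. This prevents a newcomer from slipping in and pushing concurrency above the limit.

```typescript
// Counting semaphore: at most `limit` tasks run at once, the rest wait FIFO.
class Semaphore {
  private active = 0;
  private readonly waiters: Array<() => void> = [];
  private readonly limit: number;

  constructor(limit: number) {
    if (!Number.isInteger(limit) || limit < 1) throw new RangeError("limit must be a positive integer");
    this.limit = limit;
  }

  async run<T>(task: () => Promise<T>): Promise<T> {
    if (this.active < this.limit) {
      this.active++;
    } else {
      // Wait until a finishing task transfers its slot to us.
      await new Promise<void>((resolve) => this.waiters.push(resolve));
    }
    try {
      return await task();
    } finally {
      const next = this.waiters.shift();
      if (next) next(); // slot handed over; `active` is unchanged
      else this.active--;
    }
  }
}

// One semaphore per target domain; the default limit is a placeholder.
const domainSemaphores = new Map<string, Semaphore>();

function semaphoreFor(domain: string, limit = 2): Semaphore {
  let sem = domainSemaphores.get(domain);
  if (sem === undefined) {
    sem = new Semaphore(limit);
    domainSemaphores.set(domain, sem);
  }
  return sem;
}
```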

2. Headful State Testing

- Develop in headful browser mode to observe website behavior

- Verify stealth techniques are working properly

- Create automated visual verification of critical scraping paths

- Deploy to headless in production with proven configurations

3. Browser Fingerprinting Evasion

- Configure highly specific browser fingerprints:

  import { chromium } from 'playwright';

  // Sample fingerprint configuration: keep window size, viewport and user agent consistent
  const browser = await chromium.launch({
    headless: false, // headful while developing; headless in production
    args: ['--window-size=1920,1080'],
  });
  // In Playwright, user agent and viewport are set on the browser context
  const context = await browser.newContext({
    userAgent: 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36',
    viewport: { width: 1920, height: 1080 },
  });
  const page = await context.newPage();

- Implement consistent cookie and local storage handling

- Set custom HTTP headers that match legitimate browsers

4. Anti-Bot Detection Measures

- Implement natural scrolling behaviors with variable speeds

- Add random mouse movements and realistic interaction patterns

- Wait for proper page rendering before extracting data

- Choose selectors from the rendered DOM (after client-side JavaScript runs) rather than from the static HTML source

5. Fallback Mechanisms

- When the page reloads unexpectedly, fall back to a progressive interaction strategy

- Create natural scrolling patterns before accessing target elements

- Implement user-like interactions to bypass bot detection

- Use both rendered CSS and DOM structure for element identification
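
The fallback idea can be sketched as an ordered list of candidate selectors, tried until one yields text. The `Lookup` function is a hypothetical abstraction over the browser. With Playwright, it might wrap a locator's text lookup, but that wiring is not shown here. Returning the matched selector lets monitoring see which fallback fired.

```typescript
// Hypothetical browser abstraction: text of the first element matching
// `selector`, or null if nothing matches.
type Lookup = (selector: string) => Promise<string | null>;

interface FieldResult {
  value: string;
  selector: string; // which candidate matched (useful for selector-health metrics)
}

// Try candidates in priority order; return the first non-empty match.
async function firstMatch(lookup: Lookup, candidates: readonly string[]): Promise<FieldResult | null> {
  for (const selector of candidates) {
    try {
      const text = (await lookup(selector))?.trim();
      if (text) return { value: text, selector };
    } catch {
      // A failing selector (timeout, detached node) just moves on to the next one.
    }
  }
  return null;
}
```

For Flipkart, the price candidates would be the listed `div._30jeq3._16Jk6d` followed by `._30jeq3`.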

Website-Specific Scraping Strategies

Flipkart Scraping Strategy

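Location Handling:

- Reverse engineer the cookie mechanism:

  // Sample cookie implementation. page.evaluate runs inside the browser, so the
  // pincode must be passed in as an argument (outer variables are not visible there),
  // and the page must already be on a flipkart.com URL for localStorage to apply
  const PIN = '110001'; // example pincode
  await page.evaluate((pin) => {
    document.cookie = `SN=%7B%22pincode%22%3A%22${pin}%22%7D; path=/; domain=.flipkart.com`;
    localStorage.setItem('pincode', pin);
  }, PIN);

- Intercept the location popup and inject the pincode naturally

DOM Selectors:

  Product Name: div._1AtVbE h1 span, .B_NuCI
  Price: div._30jeq3._16Jk6d, ._30jeq3
  Image: img._396cs4._2amPTt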

Anti-Scraping Bypass:

- Implement proper user agent rotation with consistent device profiles

- Use natural timing between user actions (variable 500-2000ms)

- Implement full browser fingerprinting to avoid detection
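
The Flipkart price selectors return display text rather than a number. The parser below can produce `current_price` from that text. It assumes the element's text contains the selling price as its first number (for example `₹1,299`), so it should be given only the price element's text.

```typescript
// Parse a displayed rupee price such as "₹1,299" or "₹ 1,23,456.50".
// Assumes the first number in the text is the selling price.
function parseRupeePrice(text: string): number | null {
  // Drop thousands separators (Indian grouping uses commas too), then take the first number.
  const match = text.replace(/,/g, "").match(/\d+(?:\.\d+)?/);
  if (!match) return null;
  const value = Number(match[0]);
  return Number.isFinite(value) ? value : null;
}
```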

Swiggy Scraping Strategy - Primary Focus

API-First Approach:

- Use Swiggy's internal APIs directly:

  https://www.swiggy.com/api/instamart/item/[ITEM_ID]

- Extract item IDs from URLs or search functionality

- Maintain proper authentication headers from browser session

Location Handling:

- Reverse engineer session establishment:

  // Sample implementation for location setting
  const locationPayload = {
    lat: 28.6139, // Example latitude
    lng: 77.2090, // Example longitude
    pincode: "110001"
  };
  await page.evaluate((data) => {
    localStorage.setItem('__SWIGGY_LOCATION__', JSON.stringify(data));
  }, locationPayload);

DOM Selectors (Fallback):

  Product Name: div.styles_container__3pZflz h3
  Price: div.rupee
  Image: img.styles_itemImage__3CsDL
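
For the API-first path, the item ID has to be taken from the product URL. The sketch below assumes Instamart product URLs contain a `/item/<ITEM_ID>` path segment. That URL shape should be checked against live URLs. The API base is the `https://www.swiggy.com/api/instamart/item/[ITEM_ID]` pattern listed for this site.

```typescript
// API pattern from this spec; the product-URL shape handled below is an assumption.
const SWIGGY_ITEM_API = "https://www.swiggy.com/api/instamart/item/";

// Return the path segment after "item", or null if absent or malformed.
function extractSwiggyItemId(productUrl: string): string | null {
  let url: URL;
  try {
    url = new URL(productUrl);
  } catch {
    return null;
  }
  const segments = url.pathname.split("/").filter(Boolean);
  const i = segments.indexOf("item");
  const id = i >= 0 ? segments[i + 1] : undefined;
  return id !== undefined && /^[A-Za-z0-9_-]+$/.test(id) ? id : null;
}

function swiggyItemApiUrl(productUrl: string): string | null {
  const id = extractSwiggyItemId(productUrl);
  return id === null ? null : SWIGGY_ITEM_API + encodeURIComponent(id);
}
```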

Error Handling & Monitoring

Critical Monitoring Focus

- Implement comprehensive logging for all scraping operations

- Track success/failure rates by domain, selector, and time of day

- Create real-time alerts for pattern changes that affect multiple requests

- Build daily automated tests that verify critical selectors still work
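
Success and failure rates by domain, selector and time of day could first be tracked with an in-memory counter, such as the hypothetical `ScrapeStats` below. Hours are bucketed in UTC, which is an assumption. `failing` lists the pairs that would trigger an alert. A production setup would export the same counters to a metrics backend.

```typescript
type Outcome = "success" | "failure";

interface Bucket {
  domain: string;
  selector: string;
  hourUtc: number;
  success: number;
  failure: number;
}

interface FailingPair {
  domain: string;
  selector: string;
  rate: number;
}

class ScrapeStats {
  private readonly buckets = new Map<string, Bucket>();

  record(domain: string, selector: string, outcome: Outcome, at: Date = new Date()): void {
    const hourUtc = at.getUTCHours();
    // JSON key avoids collisions, since CSS selectors may contain any separator.
    const key = JSON.stringify([domain, selector, hourUtc]);
    let b = this.buckets.get(key);
    if (b === undefined) {
      b = { domain, selector, hourUtc, success: 0, failure: 0 };
      this.buckets.set(key, b);
    }
    b[outcome]++;
  }

  // Success rate across all hours for a domain/selector pair, or null if unseen.
  successRate(domain: string, selector: string): number | null {
    let ok = 0;
    let total = 0;
    this.buckets.forEach((b) => {
      if (b.domain === domain && b.selector === selector) {
        ok += b.success;
        total += b.success + b.failure;
      }
    });
    return total === 0 ? null : ok / total;
  }

  // Pairs whose success rate is below `threshold`: candidates for alerts.
  failing(threshold: number): FailingPair[] {
    const seen = new Set<string>();
    const out: FailingPair[] = [];
    this.buckets.forEach((b) => {
      const pair = JSON.stringify([b.domain, b.selector]);
      if (seen.has(pair)) return;
      seen.add(pair);
      const rate = this.successRate(b.domain, b.selector);
      if (rate !== null && rate < threshold) out.push({ domain: b.domain, selector: b.selector, rate });
    });
    return out;
  }
}
```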

Key Metrics to Monitor

- Time-to-first-byte for initial page load

- DOM ready event timing

- Total scraping duration per site

- Element selection success rates

- Bot detection trigger points
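
Duration metrics are usually judged by percentiles rather than averages. The sketch below uses the nearest-rank method and checks the 95th percentile against the 5-second requirement. Choosing p95 is an assumption.

```typescript
// Nearest-rank percentile over recorded durations in ms.
// Raw samples are fine for a sketch; a real deployment would use a histogram.
function percentile(samples: readonly number[], p: number): number | null {
  if (samples.length === 0) return null;
  if (!(p > 0 && p <= 100)) throw new RangeError("p must be in (0, 100]");
  const sorted = [...samples].sort((a, b) => a - b);
  const rank = Math.ceil((p / 100) * sorted.length); // 1-based nearest rank
  return sorted[rank - 1];
}

// True if the chosen percentile of total scrape durations fits the budget.
function withinBudget(durationsMs: readonly number[], budgetMs = 5_000, p = 95): boolean {
  const value = percentile(durationsMs, p);
  return value === null || value <= budgetMs;
}
```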

Politeness & Ethics Considerations

Rate Limiting (Low Priority)

- Implement domain-specific rate limiting based on site policies

- Use jittered delays between requests that mimic normal user patterns

- Consider time-of-day patterns in request frequency
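
Jittered per-domain spacing can be sketched as a pacer that reserves the next allowed request time for each domain. The base interval and jitter width are placeholders, to be replaced with per-site values. `random` is injectable so that the behaviour can be tested deterministically.

```typescript
// Per-domain minimum spacing plus random jitter; interval values are placeholders.
class DomainPacer {
  private readonly nextAllowedAt = new Map<string, number>();
  private readonly baseIntervalMs: number;
  private readonly jitterMs: number;
  private readonly random: () => number;

  constructor(baseIntervalMs: number, jitterMs: number, random: () => number = Math.random) {
    this.baseIntervalMs = baseIntervalMs;
    this.jitterMs = jitterMs;
    this.random = random;
  }

  // How many ms to wait before hitting `domain`; also reserves that slot so
  // concurrent callers for the same domain are spaced out too.
  reserve(domain: string, now: number = Date.now()): number {
    const earliest = Math.max(now, this.nextAllowedAt.get(domain) ?? now);
    const gap = this.baseIntervalMs + this.random() * this.jitterMs;
    this.nextAllowedAt.set(domain, earliest + gap);
    return earliest - now;
  }
}

const sleep = (ms: number) => new Promise<void>((resolve) => setTimeout(resolve, ms));

// Run `task` only after the pacer allows a request to `domain`.
async function paced<T>(pacer: DomainPacer, domain: string, task: () => Promise<T>): Promise<T> {
  await sleep(pacer.reserve(domain));
  return task();
}
```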

Legal Considerations

- Review Terms of Service for each target site

- Consider using commercial scraping APIs as alternatives

- Implement user-agent identification for transparency
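
The robots.txt constraint can be checked before each fetch. This sketch reduces RFC 9309 matching to its core steps. Rules are grouped by user-agent, with the `*` group used as a fallback. The longest matching path prefix wins, and Allow wins ties. The `*` and `$` path wildcards are not implemented, and fetching and caching robots.txt are left out.

```typescript
interface RobotsRule {
  allow: boolean;
  path: string;
}

// Parse robots.txt into rule lists keyed by lower-cased user-agent token.
// Groups repeated for the same agent are merged, as RFC 9309 requires.
function parseRobots(text: string): Map<string, RobotsRule[]> {
  const groups = new Map<string, RobotsRule[]>();
  let agents: string[] = [];
  let inAgentLines = false; // consecutive User-agent lines share one group
  for (const raw of text.split(/\r?\n/)) {
    const line = raw.replace(/#.*$/, "").trim();
    const colon = line.indexOf(":");
    if (colon < 0) continue;
    const field = line.slice(0, colon).trim().toLowerCase();
    const value = line.slice(colon + 1).trim();
    if (field === "user-agent") {
      if (!inAgentLines) agents = []; // a new group starts
      const ua = value.toLowerCase();
      agents.push(ua);
      if (!groups.has(ua)) groups.set(ua, []);
      inAgentLines = true;
    } else if (field === "allow" || field === "disallow") {
      inAgentLines = false;
      if (value === "") continue; // an empty rule matches nothing
      for (const ua of agents) {
        groups.get(ua)!.push({ allow: field === "allow", path: value });
      }
    }
  }
  return groups;
}

// Longest matching prefix wins; Allow wins a tie; no matching rule means allowed.
function isAllowed(groups: Map<string, RobotsRule[]>, userAgentToken: string, path: string): boolean {
  const rules = groups.get(userAgentToken.toLowerCase()) ?? groups.get("*") ?? [];
  let best: RobotsRule | null = null;
  for (const rule of rules) {
    if (!path.startsWith(rule.path)) continue;
    const longer = best === null || rule.path.length > best.path.length;
    const tieAllow = best !== null && rule.path.length === best.path.length && rule.allow;
    if (longer || tieAllow) best = rule;
  }
  return best === null || best.allow;
}
```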

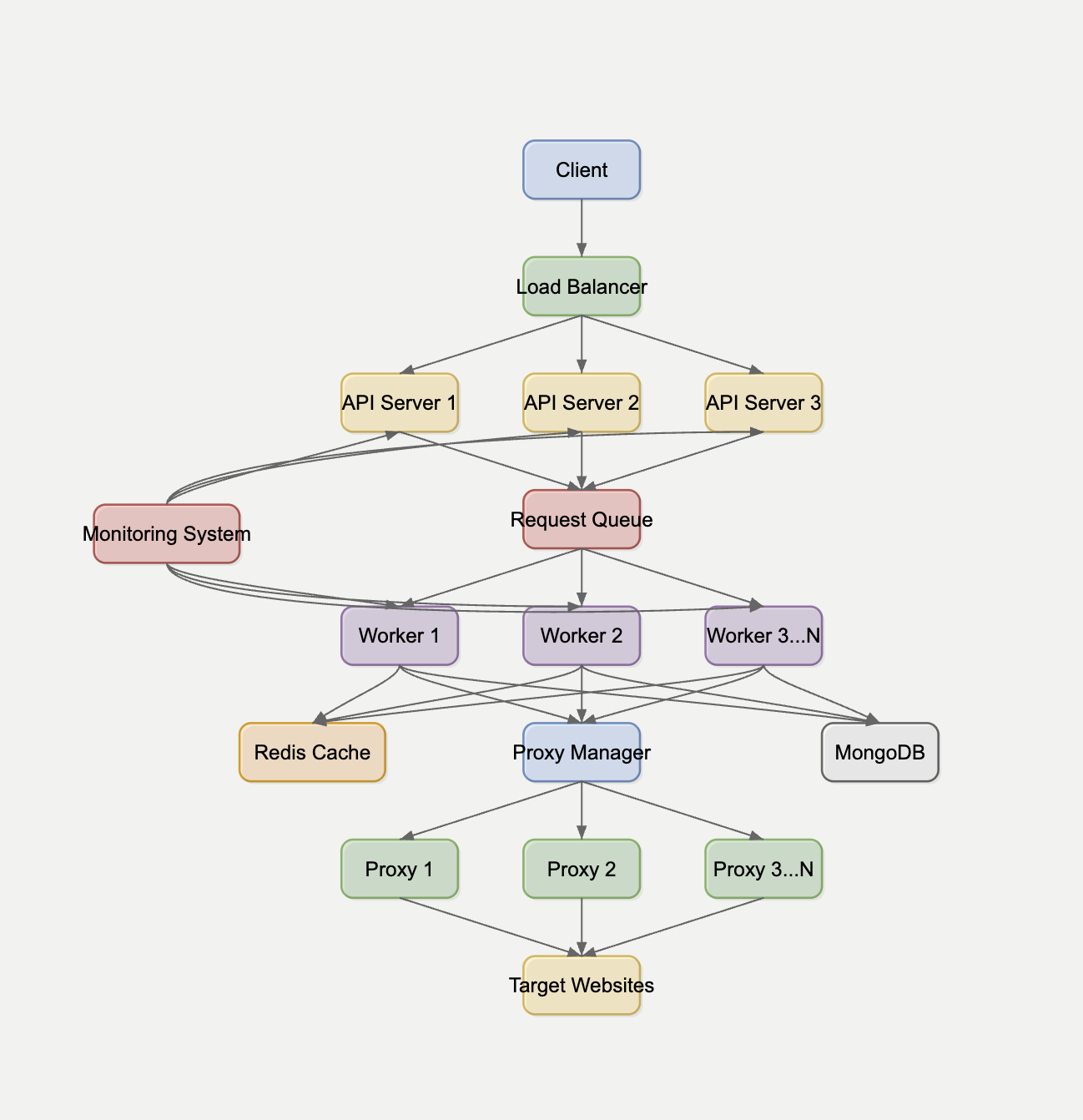

Scalable Architecture (V2)

V2 Enhancements

Queue-Based Architecture

- Decouple request handling from scraping operations

- Use Kafka/RabbitMQ/SQS for reliable message delivery

- Implement priority queuing for premium customers
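
Priority queuing can be illustrated with two in-process FIFO lanes, where premium jobs are always dequeued first. This models only the ordering. RabbitMQ supports per-queue message priorities natively, whereas with SQS or Kafka a common approach is a separate queue or topic per tier. Strict priority can starve standard jobs under sustained premium load, so a weighted scheme may be preferable. `ScrapeJob` and `TieredQueue` are hypothetical.

```typescript
type Tier = "premium" | "standard";

interface ScrapeJob {
  id: string;
  url: string;
  pincode: string;
  tier: Tier;
}

// Two FIFO lanes; premium is always drained first (strict priority).
class TieredQueue {
  private readonly lanes: Record<Tier, ScrapeJob[]> = { premium: [], standard: [] };

  enqueue(job: ScrapeJob): void {
    this.lanes[job.tier].push(job);
  }

  dequeue(): ScrapeJob | undefined {
    return this.lanes.premium.shift() ?? this.lanes.standard.shift();
  }

  get size(): number {
    return this.lanes.premium.length + this.lanes.standard.length;
  }
}
```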

Distributed Scraping

- Horizontally scale worker nodes

- Implement consistent hashing for domain-specific worker assignment

- Use containerization (Docker + Kubernetes) for easy scaling
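
Consistent hashing keeps each domain pinned to the same worker. When a worker is removed, only the domains that were assigned to it move. A minimal ring with virtual nodes is sketched below. The FNV-1a hash and the 100 replicas per worker are illustrative choices.

```typescript
// FNV-1a 32-bit over UTF-16 code units; fine for spreading keys, not for security.
function fnv1a(input: string): number {
  let hash = 0x811c9dc5;
  for (let i = 0; i < input.length; i++) {
    hash ^= input.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193) >>> 0;
  }
  return hash >>> 0;
}

class HashRing {
  private ring: Array<{ point: number; worker: string }> = [];
  private readonly replicas: number;

  constructor(workers: readonly string[], replicas = 100) {
    this.replicas = replicas;
    workers.forEach((w) => this.add(w));
  }

  // Place `replicas` virtual nodes for the worker and keep the ring sorted.
  add(worker: string): void {
    for (let r = 0; r < this.replicas; r++) {
      this.ring.push({ point: fnv1a(`${worker}#${r}`), worker });
    }
    this.ring.sort((a, b) => a.point - b.point);
  }

  remove(worker: string): void {
    this.ring = this.ring.filter((n) => n.worker !== worker);
  }

  // First virtual node clockwise from the key's hash (binary search, wrapping).
  workerFor(key: string): string {
    if (this.ring.length === 0) throw new Error("ring is empty");
    const h = fnv1a(key);
    let lo = 0;
    let hi = this.ring.length;
    while (lo < hi) {
      const mid = (lo + hi) >>> 1;
      if (this.ring[mid].point < h) lo = mid + 1;
      else hi = mid;
    }
    return this.ring[lo === this.ring.length ? 0 : lo].worker;
  }
}
```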

Proxy Management

- Rotate through residential and datacenter proxies

- Implement proxy health checking and scoring

- Domain-specific proxy assignment based on success rates
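
Proxy health scoring could start as an exponentially weighted success rate per proxy and domain. The smoothing factor of 0.2 and the neutral starting score of 0.5 are assumptions. `pick` expects a list of proxies that have already passed health checks. `ProxyScores` is a hypothetical name.

```typescript
// EWMA success score per (proxy, domain); unseen pairs start at a neutral 0.5.
class ProxyScores {
  private readonly scores = new Map<string, number>();
  private readonly alpha: number;

  constructor(alpha = 0.2) {
    this.alpha = alpha;
  }

  private key(proxy: string, domain: string): string {
    return JSON.stringify([proxy, domain]);
  }

  score(proxy: string, domain: string): number {
    return this.scores.get(this.key(proxy, domain)) ?? 0.5;
  }

  // Move the score a fraction `alpha` toward 1 (success) or 0 (failure).
  record(proxy: string, domain: string, ok: boolean): void {
    const prev = this.score(proxy, domain);
    this.scores.set(this.key(proxy, domain), prev + this.alpha * ((ok ? 1 : 0) - prev));
  }

  // Highest-scoring proxy for the domain, or undefined if the list is empty.
  pick(healthyProxies: readonly string[], domain: string): string | undefined {
    let best: string | undefined;
    for (const p of healthyProxies) {
      if (best === undefined || this.score(p, domain) > this.score(best, domain)) best = p;
    }
    return best;
  }
}
```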

MongoDB for Historical Data

- Store historical price data for trend analysis

- Implement TTL indexes for automatic data cleanup

- Use time-series collections for efficient price history queries
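
The sketch below creates the price-history collection, assuming MongoDB 5.0+ time-series collections. On time-series collections, automatic cleanup is configured with the collection-level `expireAfterSeconds` option. The collection name, field names and 90-day retention are hypothetical. `DbLike` covers only the one method used, which the official Node.js driver's `Db.createCollection` provides.

```typescript
// Hypothetical document layout for one price observation.
interface PriceObservation {
  scrapedAt: Date; // timeField
  meta: { platform: string; productUrl: string; pincode: string }; // metaField
  price: number;
  mapPrice: number | null;
  status: "GREEN" | "RED" | null;
}

// Minimal structural type for the one database method used here.
interface DbLike {
  createCollection(name: string, options: Record<string, unknown>): Promise<unknown>;
}

const RETENTION_SECONDS = 90 * 24 * 60 * 60; // assumed 90-day retention

function createPriceHistory(db: DbLike): Promise<unknown> {
  return db.createCollection("price_history", {
    timeseries: { timeField: "scrapedAt", metaField: "meta", granularity: "hours" },
    expireAfterSeconds: RETENTION_SECONDS, // automatic removal of old observations
  });
}

// Filter for one product's history since a given time (hypothetical helper).
function historyFilter(productUrl: string, since: Date): Record<string, unknown> {
  return { "meta.productUrl": productUrl, scrapedAt: { $gte: since } };
}
```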

Commercial Scraping API Options

If building an in-house scraper proves challenging, consider these commercial alternatives:

ScraperAPI (https://www.scraperapi.com/)

- Pros: Handles proxies, CAPTCHAs, and browser rendering

- Cons: Higher latency, less control over location handling

- Pricing: Pay-per-request model starting at $29/month for 250,000 requests

BrightData (https://brightdata.com/)

- Pros: Advanced proxy network with residential IPs, detailed location targeting

- Cons: Higher cost, complex integration

- Pricing: Custom pricing based on usage patterns

Zyte (formerly ScrapingHub) (https://www.zyte.com/)

- Pros: Enterprise-grade, high reliability, good support

- Cons: Higher cost, potential overkill for simpler needs

- Pricing: Custom pricing, typically more expensive than alternatives

Technology Recommendations

For Python Implementation

Web Framework: FastAPI

- Async support for handling concurrent requests

- Built-in request validation via Pydantic

- Easy OpenAPI documentation

Browser Automation: Playwright

- Multi-browser support (Chromium, Firefox, WebKit)

- Better stealth capabilities than Selenium

- Good handling of modern web applications

Data Storage: Motor (Async MongoDB)

- Non-blocking MongoDB operations

- Good support for time-series data

For TypeScript Implementation

Web Framework: NestJS

- Enterprise-ready structure with modular design

- Strong typing with TypeScript

- Good testing support

Browser Automation: Puppeteer/Playwright

- Native TypeScript support

- Good performance with Chromium

Data Storage: Mongoose

- TypeScript support with schemas

- Rich query capabilities

Conclusion

The proposed system provides a robust solution for scraping product pricing data from e-commerce sites and checking it against MAP policies. By focusing on advanced browser fingerprinting, stealth techniques, and API-first approaches (especially for Swiggy), the system aims to meet the required response time of ≤ 5 seconds while remaining resilient to anti-bot measures.

For the initial implementation, focus should be placed on the Swiggy API-first approach as it offers the most reliable and efficient path to data extraction. The system should emphasize robust error monitoring and detection of website structure changes to maintain operational reliability.

Development should begin with headful browser testing to fully understand site behaviors before moving to production headless deployment, with comprehensive fingerprinting and anti-detection measures in place. Continuous monitoring of scraping success rates and selector reliability will ensure the system remains effective as target websites evolve.